🎯 Learning Objectives

After this lesson, you will be able to:

✅ Understand overfitting and how to detect it

✅ Apply the main Cross-Validation methods

✅ Use GridSearchCV and RandomizedSearchCV

✅ Practice tuning hyperparameters

Duration: 4-5 hours | Difficulty: Theory

📖 Key Terms Glossary

| Term | Vietnamese | Simple explanation |
|---|---|---|
| Cross-Validation | Kiểm chéo | Split the data into k folds to evaluate the model |
| K-Fold CV | K-Fold | Split the data into k equal parts |
| Overfitting | Quá khớp | The model memorizes the training data and does not generalize |
| Underfitting | Chưa khớp | The model is too simple |
| Bias-Variance | Sai lệch-Phương sai | Trade-off between bias and variance |
| GridSearchCV | Tìm lưới | Tries every hyperparameter combination |
| RandomizedSearchCV | Tìm ngẫu nhiên | Randomly samples hyperparameter combinations |
| Learning Curve | Đường học | Plot of score vs. training set size |

Checkpoint

Have you read through the glossary? Try to memorize these terms!

⚠️ Overfitting vs Underfitting

1. Overfitting vs Underfitting

1.1 Định nghĩa

| Trạng thái | Train Error | Test Error | Vấn đề |

|---|---|---|---|

| Underfitting | Cao | Cao | Model quá đơn giản |

| Good fit | Thấp | Thấp | Lý tưởng |

| Overfitting | Rất thấp | Cao | Model quá phức tạp |

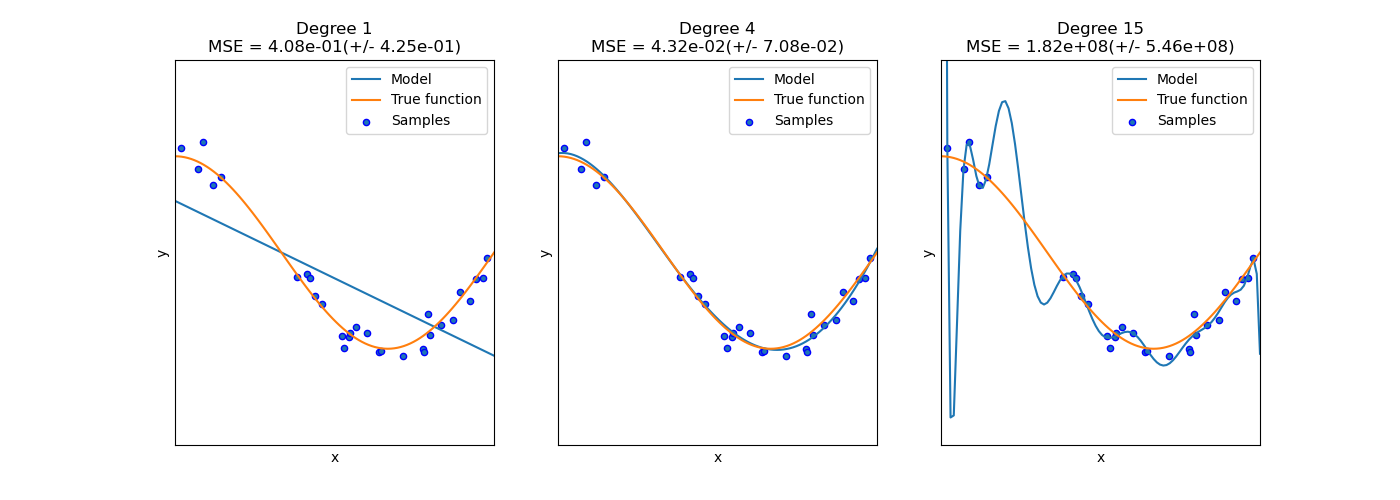

Hình: Underfitting (trái) - Good Fit (giữa) - Overfitting (phải)

1.2 Bias-Variance Tradeoff

| Component | Meaning |
|---|---|
| Bias | Error caused by overly simple assumptions |
| Variance | Error caused by sensitivity to the training data |
| Irreducible Error | Error that cannot be reduced (noise) |
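For squared-error loss, the three components in the table add up to the expected test error. A standard way to write this, assuming the data follow $y = f(x) + \varepsilon$ with noise variance $\sigma^2$ and with $\hat{f}$ denoting the fitted model (this notation is introduced here for illustration and does not come from the lesson):

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{Bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{Variance}}
  + \underbrace{\sigma^2}_{\text{Irreducible Error}}
```

The expectation is taken over training sets and noise. Making the model more flexible usually lowers the bias term and raises the variance term, which is the trade-off.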

1.3 How to detect Overfitting

```python
# Assumes model, X_train, y_train, X_test, y_test are already defined

# Train and evaluate
model.fit(X_train, y_train)

train_score = model.score(X_train, y_train)
test_score = model.score(X_test, y_test)

print(f"Train Score: {train_score:.4f}")
print(f"Test Score: {test_score:.4f}")
print(f"Gap: {train_score - test_score:.4f}")

# Rule of thumb: if gap > 0.1, the model may be overfitting
```

Checkpoint

Can you recognize the signs of Overfitting from train/test scores?
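To make the train/test gap concrete, here is a minimal self-contained sketch. The synthetic dataset, the choice of decision trees, and the helper name train_test_gap are assumptions made for illustration; they are not part of the lesson's own material.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with 10% label noise (flip_y), chosen only for illustration
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

def train_test_gap(model):
    """Fit the model and return (train accuracy, test accuracy, gap)."""
    model.fit(X_train, y_train)
    train_score = model.score(X_train, y_train)
    test_score = model.score(X_test, y_test)
    return train_score, test_score, train_score - test_score

# Unrestricted depth: the tree can memorize the training set, noise included
deep = train_test_gap(DecisionTreeClassifier(random_state=42))
# Limited depth: a simpler model that cannot memorize individual samples
shallow = train_test_gap(DecisionTreeClassifier(max_depth=3, random_state=42))

for name, (tr, te, gap) in [("max_depth=None", deep), ("max_depth=3", shallow)]:
    print(f"{name}: train={tr:.4f} test={te:.4f} gap={gap:.4f}")
```

The unrestricted tree should show a much larger gap than the depth-limited one, which is the overfitting signature described in the table in section 1.1.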

🔄 Cross-Validation

2. Cross-Validation

2.1 Why do we need Cross-Validation?

Problems with a single train/test split:

- The test score depends on how the data happens to be split

- High variance between different random splits

Solution: K-Fold Cross-Validation
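The dependence on the split can be seen by repeating a single train/test split with different random seeds. This is a small sketch on synthetic data; the dataset, the logistic regression model, and the number of repetitions are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Small synthetic dataset with some label noise, for illustration only
X, y = make_classification(n_samples=200, n_features=10, flip_y=0.1,
                           random_state=0)

test_scores = []
for seed in range(10):
    # Same data and same model; only the random split changes
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed
    )
    model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
    test_scores.append(model.score(X_test, y_test))

print("Test scores:", [f"{s:.3f}" for s in test_scores])
print(f"Min: {min(test_scores):.3f}  Max: {max(test_scores):.3f}")
```

The spread between the minimum and maximum is the variance that averaging over K folds is meant to smooth out.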

2.2 K-Fold Cross-Validation

Algorithm:

| Step | Description |
|---|---|
| 1 | Split the data into K folds of (roughly) equal size |
| 2 | For each fold i: train on the other K-1 folds, test on fold i |
| 3 | Average the K scores |

Example with 5 folds:

| Fold | Data 1 | Data 2 | Data 3 | Data 4 | Data 5 |
|---|---|---|---|---|---|
| 1 | Test | Train | Train | Train | Train |
| 2 | Train | Test | Train | Train | Train |
| 3 | Train | Train | Test | Train | Train |
| 4 | Train | Train | Train | Test | Train |
| 5 | Train | Train | Train | Train | Test |

Figure: K-Fold Cross-Validation
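The rotation in the table above can be reproduced with scikit-learn's KFold splitter. This sketch uses ten toy samples (an assumption for readability) so that each fold holds exactly two of them.

```python
import numpy as np
from sklearn.model_selection import KFold

X = np.arange(10).reshape(-1, 1)  # 10 toy samples with indices 0..9

kf = KFold(n_splits=5)  # no shuffling, so folds are contiguous blocks
splits = list(kf.split(X))

for i, (train_idx, test_idx) in enumerate(splits, start=1):
    print(f"Fold {i}: test={test_idx.tolist()} train={train_idx.tolist()}")
```

Every sample appears in the test set exactly once, and in the training set for the other four folds.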

2.3 Types of Cross-Validation

| Method | Use case |
|---|---|
| K-Fold | General purpose |
| Stratified K-Fold | Classification, especially with imbalanced classes |
| Leave-One-Out | Small datasets |
| Time Series Split | Time series data |
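The four methods in the table map to splitter classes in sklearn.model_selection. The sketch below runs them on toy data to show how their splits differ. The 20-sample dataset and the 80/20 class ratio are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.model_selection import (
    KFold, StratifiedKFold, LeaveOneOut, TimeSeriesSplit
)

X = np.arange(20).reshape(-1, 1)
y = np.array([0] * 16 + [1] * 4)  # imbalanced toy labels: 80% / 20%

splitters = {
    "K-Fold": KFold(n_splits=4, shuffle=True, random_state=42),
    "Stratified K-Fold": StratifiedKFold(n_splits=4, shuffle=True, random_state=42),
    "Leave-One-Out": LeaveOneOut(),
    "Time Series Split": TimeSeriesSplit(n_splits=4),
}

for name, splitter in splitters.items():
    n_splits = splitter.get_n_splits(X, y)
    # Look only at the first split of each method
    train_idx, test_idx = next(iter(splitter.split(X, y)))
    print(f"{name}: {n_splits} splits, first test fold = {test_idx.tolist()}, "
          f"positives in it = {int(y[test_idx].sum())}")
```

Stratified K-Fold keeps the class ratio in every fold, Leave-One-Out produces one split per sample, and Time Series Split only ever tests on samples that come after the training samples.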

📝 Worked Example by Hand

3. Worked Example by Hand

3.1 5-Fold CV with Accuracy

Results of the 5 folds:

- Fold 1: 0.85

- Fold 2: 0.82

- Fold 3: 0.88

- Fold 4: 0.84

- Fold 5: 0.86

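Mean:

$$\bar{x} = \frac{0.85 + 0.82 + 0.88 + 0.84 + 0.86}{5} = \frac{4.25}{5} = 0.85$$

Standard Deviation (population form, which is the default of NumPy's std()):

$$\sigma = \sqrt{\frac{1}{5}\sum_{i=1}^{5}(x_i - \bar{x})^2} = \sqrt{\frac{0.0020}{5}} = 0.02$$

Result: CV accuracy = 0.85 ± 0.02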
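The mean and standard deviation of the five fold scores listed above can be checked with NumPy. The sketch also prints the sample standard deviation (ddof=1) for comparison, since which form a given course or library intends is an assumption worth making explicit.

```python
import numpy as np

fold_scores = np.array([0.85, 0.82, 0.88, 0.84, 0.86])

mean = fold_scores.mean()
std_population = fold_scores.std()    # ddof=0, NumPy's default (divides by n)
std_sample = fold_scores.std(ddof=1)  # ddof=1 (divides by n - 1)

print(f"Mean: {mean:.4f}")
print(f"Std (ddof=0): {std_population:.4f}")
print(f"Std (ddof=1): {std_sample:.4f}")
```

This prints a mean of 0.8500, a population standard deviation of 0.0200, and a sample standard deviation of 0.0224.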

💻 Cross-Validation in Practice

4. Cross-Validation in Practice

4.1 Basic Cross-Validation

```python
from sklearn.model_selection import cross_val_score, KFold, StratifiedKFold
from sklearn.ensemble import RandomForestClassifier
import numpy as np

# Assumes X (features) and y (labels) are already loaded
model = RandomForestClassifier(n_estimators=100, random_state=42)

# Simple 5-fold CV (for classifiers, an integer cv uses stratified folds)
scores = cross_val_score(model, X, y, cv=5, scoring='accuracy')
print(f"CV Scores: {scores}")
print(f"Mean: {scores.mean():.4f} (+/- {scores.std()*2:.4f})")

# Stratified K-Fold with shuffling (for classification)
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
# scoring='f1' assumes binary labels
scores = cross_val_score(model, X, y, cv=skf, scoring='f1')
print(f"Stratified CV F1: {scores.mean():.4f} (+/- {scores.std()*2:.4f})")
```

4.2 Multiple Metrics

```python
from sklearn.model_selection import cross_validate

# Evaluate several metrics in a single cross-validation run
scoring = ['accuracy', 'precision', 'recall', 'f1']
cv_results = cross_validate(model, X, y, cv=5, scoring=scoring)

for metric in scoring:
    scores = cv_results[f'test_{metric}']
    print(f"{metric}: {scores.mean():.4f} (+/- {scores.std()*2:.4f})")
```

Checkpoint

Have you tried running cross_val_score on your own data?

⚙️ Hyperparameter Tuning

5. Hyperparameter Tuning

5.1 GridSearchCV

Exhaustively searches every combination of the given hyperparameter values.

```python
from sklearn.model_selection import GridSearchCV
from sklearn.ensemble import RandomForestClassifier

# Define the parameter grid
param_grid = {
    'n_estimators': [50, 100, 200],
    'max_depth': [3, 5, 10, None],
    'min_samples_split': [2, 5, 10],
    'min_samples_leaf': [1, 2, 4]
}

# GridSearchCV
grid_search = GridSearchCV(
    estimator=RandomForestClassifier(random_state=42),
    param_grid=param_grid,
    cv=5,
    scoring='f1',
    n_jobs=-1,  # Parallel processing
    verbose=1
)

grid_search.fit(X_train, y_train)

# Results
print(f"Best Parameters: {grid_search.best_params_}")
print(f"Best CV Score: {grid_search.best_score_:.4f}")

# Best model, refit on the full training set
best_model = grid_search.best_estimator_
```

5.2 RandomizedSearchCV

Faster when the parameter space is large.

```python
from sklearn.model_selection import RandomizedSearchCV
from sklearn.ensemble import RandomForestClassifier
from scipy.stats import randint, uniform

# Define the distributions to sample from
param_dist = {
    'n_estimators': randint(50, 300),
    'max_depth': randint(3, 20),
    'min_samples_split': randint(2, 20),
    'min_samples_leaf': randint(1, 10),
    'max_features': uniform(0.1, 0.9)  # uniform(loc, scale): range [0.1, 1.0]
}

# RandomizedSearchCV
random_search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=42),
    param_distributions=param_dist,
    n_iter=50,  # Number of sampled combinations
    cv=5,
    scoring='f1',
    n_jobs=-1,
    random_state=42
)

random_search.fit(X_train, y_train)
print(f"Best Parameters: {random_search.best_params_}")
print(f"Best CV Score: {random_search.best_score_:.4f}")
```

5.3 GridSearch vs RandomizedSearch

| Aspect | GridSearchCV | RandomizedSearchCV |
|---|---|---|
| Search strategy | Exhaustive | Random sampling |
| Speed | Slow | Fast |
| Large parameter space | Impractical | Feasible |
| Guaranteed to find the best combination in the grid | Yes | No |
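The speed difference comes down to how many models are fitted. As a rough sketch, the grid from section 5.1 and the n_iter=50 setting from section 5.2 can be compared by counting fits. ParameterGrid is the scikit-learn helper that enumerates a grid; the variable names are illustrative.

```python
from sklearn.model_selection import ParameterGrid

# Same grid as in section 5.1
param_grid = {
    'n_estimators': [50, 100, 200],
    'max_depth': [3, 5, 10, None],
    'min_samples_split': [2, 5, 10],
    'min_samples_leaf': [1, 2, 4],
}
cv = 5       # folds per candidate
n_iter = 50  # candidates sampled by RandomizedSearchCV in section 5.2

n_grid_candidates = len(ParameterGrid(param_grid))  # 3 * 4 * 3 * 3
grid_fits = n_grid_candidates * cv
random_fits = n_iter * cv

print(f"Grid search: {n_grid_candidates} candidates, {grid_fits} fits")
print(f"Randomized search: {n_iter} candidates, {random_fits} fits")
```

Grid search needs 108 candidates and 540 fits, against 250 fits for the randomized search. Each search also performs one final refit on the full training set, and the grid count grows multiplicatively with every added parameter while n_iter stays fixed.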

📈 Learning Curves

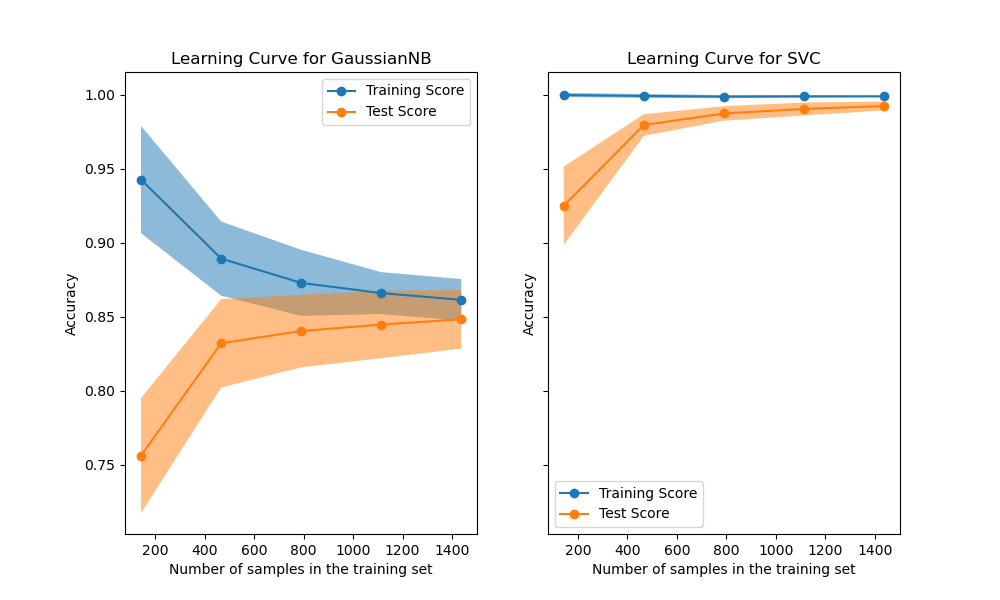

6. Learning Curves

6.1 Detecting Overfitting/Underfitting

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import learning_curve

# Assumes model, X and y are already defined
train_sizes, train_scores, val_scores = learning_curve(
    model, X, y,
    cv=5,
    train_sizes=np.linspace(0.1, 1.0, 10),
    scoring='accuracy'
)

# Compute mean and std across the CV folds
train_mean = train_scores.mean(axis=1)
train_std = train_scores.std(axis=1)
val_mean = val_scores.mean(axis=1)
val_std = val_scores.std(axis=1)

# Plot the Learning Curve
plt.figure(figsize=(10, 6))
plt.plot(train_sizes, train_mean, label='Training score')
plt.fill_between(train_sizes, train_mean - train_std,
                 train_mean + train_std, alpha=0.1)
plt.plot(train_sizes, val_mean, label='Validation score')
plt.fill_between(train_sizes, val_mean - val_std,
                 val_mean + val_std, alpha=0.1)
plt.xlabel('Training Size')
plt.ylabel('Score')
plt.title('Learning Curve')
plt.legend()
plt.grid(True)
plt.show()
```

Figure: Learning Curve for detecting overfitting

6.2 Interpreting the Learning Curve

| Pattern | Problem | Solution |
|---|---|---|
| Train high, validation low, large gap | Overfitting | Regularization, more data |
| Both low | Underfitting | More complex model, more features |
| Both high, small gap | Good fit | OK! |
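The three patterns in the table can be turned into a rough rule-based check. The function diagnose_fit and its thresholds are hypothetical: max_gap=0.1 mirrors the rule of thumb from section 1.3, while min_score=0.8 is an arbitrary assumption that depends on the task and metric.

```python
def diagnose_fit(train_score, val_score, min_score=0.8, max_gap=0.1):
    """Label a (train, validation) score pair using simple threshold rules.

    min_score and max_gap are illustrative thresholds, not universal constants.
    """
    gap = train_score - val_score
    if gap > max_gap:
        # Training score is far above the validation score
        return "overfitting"
    if train_score < min_score and val_score < min_score:
        # Both scores are low and close together
        return "underfitting"
    return "good fit"

# Example score pairs taken from the right-hand end of a learning curve
for train, val in [(0.99, 0.75), (0.62, 0.60), (0.92, 0.90)]:
    print(f"train={train:.2f} val={val:.2f} -> {diagnose_fit(train, val)}")
```

This is only a starting point for reading a curve. In practice the shape of the curve as the training size grows matters as much as the final pair of values.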

✅ Best Practices

7. Best Practices

7.1 A complete Pipeline

```python
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Build the pipeline: scaling is fit inside each CV fold
pipeline = Pipeline([
    ('scaler', StandardScaler()),
    ('classifier', RandomForestClassifier(random_state=42))
])

# Parameter grid for the pipeline (prefix: <step name>__<parameter>)
param_grid = {
    'classifier__n_estimators': [50, 100],
    'classifier__max_depth': [3, 5, 10]
}

# GridSearchCV with the pipeline
grid_search = GridSearchCV(pipeline, param_grid, cv=5, scoring='f1')
grid_search.fit(X_train, y_train)
```

7.2 Important tips

| Tip | Description |
|---|---|
| Always use CV | Instead of a single train/test split |
| Stratified | For classification |
| Pipeline | Avoids data leakage |
| RandomizedSearch first | Narrow down the range before running GridSearch |
| Test set | Use it only once, at the very end |
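The tips above can be combined into one workflow. The sketch below is a minimal version under stated assumptions: the dataset is synthetic, the parameter ranges are arbitrary, and n_iter is kept small so it runs quickly. It is not a recommended configuration.

```python
from scipy.stats import randint
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import (
    RandomizedSearchCV, StratifiedKFold, train_test_split
)
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic binary classification data, for illustration only
X, y = make_classification(n_samples=300, n_features=10, random_state=42)

# Hold out the test set first; it is not touched during tuning
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Pipeline: the scaler is refit on the training part of every fold
pipeline = Pipeline([
    ('scaler', StandardScaler()),
    ('classifier', RandomForestClassifier(random_state=42)),
])

param_dist = {
    'classifier__n_estimators': randint(20, 60),
    'classifier__max_depth': randint(3, 10),
}

search = RandomizedSearchCV(
    pipeline,
    param_distributions=param_dist,
    n_iter=5,  # kept small so the sketch runs quickly
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=42),
    scoring='f1',
    random_state=42,
)
search.fit(X_train, y_train)

# Single final evaluation on the held-out test set
test_f1 = f1_score(y_test, search.predict(X_test))
print(f"Best Parameters: {search.best_params_}")
print(f"Best CV F1: {search.best_score_:.4f}")
print(f"Test F1: {test_f1:.4f}")
```

Because preprocessing lives inside the pipeline, no statistics from a validation fold or from the test set leak into training. A narrower GridSearchCV around the best parameters found here would be the natural next step.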

📝 Summary

Key Takeaways:

- 🔄 Cross-Validation gives a more reliable performance estimate than a single train/test split

- 📊 K-Fold CV (k=5 or 10) is the most common choice

- ⚖️ Bias-Variance Tradeoff: Underfitting (too simple) vs Overfitting (too complex)

- 🔍 GridSearchCV tries every combination; RandomizedSearchCV is faster

- 💻 Scikit-learn: cross_val_score(), GridSearchCV(), learning_curve()

Practice Exercises

- Exercise 1: Implement 5-Fold CV from scratch (without sklearn)

- Exercise 2: Compare GridSearchCV and RandomizedSearchCV on the same dataset

- Exercise 3: Plot a Learning Curve and analyze overfitting


Self-Check Questions

- How do Overfitting and Underfitting differ? How can you detect them with a Learning Curve?

- How does K-Fold Cross-Validation work? Why is it better than a single train/test split?

- What distinguishes GridSearchCV from RandomizedSearchCV, and when should you use each?

- What is the Bias-Variance Tradeoff? How do you balance bias and variance?

🎉 Great job! You have completed the lesson on Cross-Validation and Hyperparameter Tuning!

Next: Unsupervised Learning, where we discover hidden patterns in data!

Checkpoint

Have you mastered Cross-Validation? Then you are ready to move on to Unsupervised Learning!